Introduction to our Lab

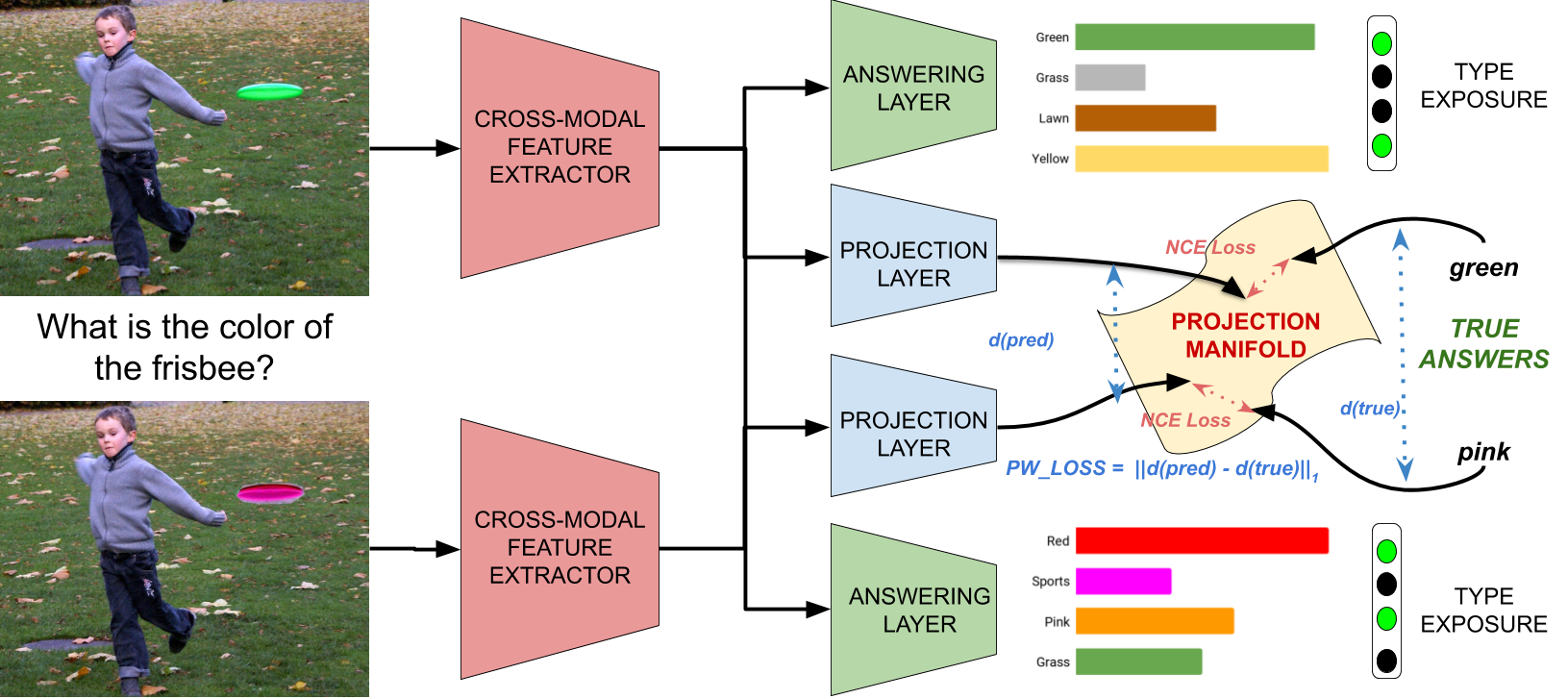

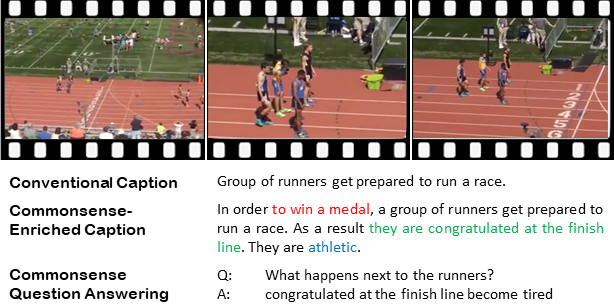

The current research focus of our lab is to build automated systems (and to develop underlying methodologies and address associated challenges) that can understand text, images, and videos. We then apply that understanding to various AI (Artificial Intelligence), Robotics, and Human-Centered AI domains, such as

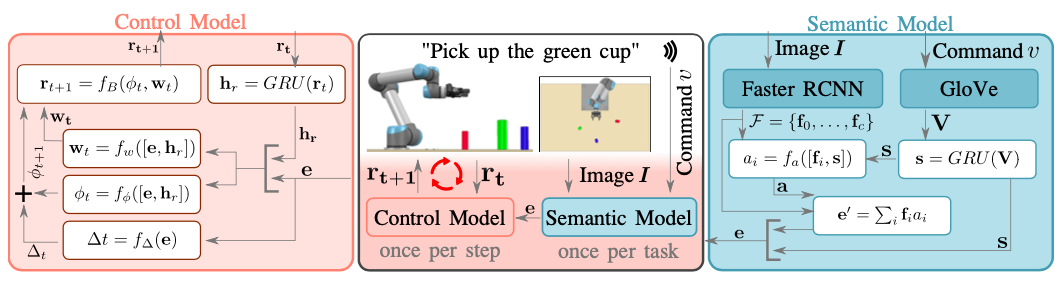

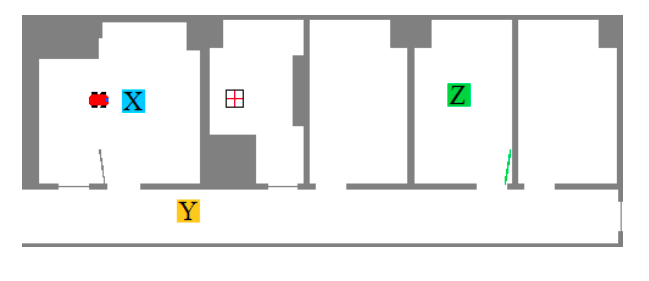

teaching robots through demonstrations and language instructions,

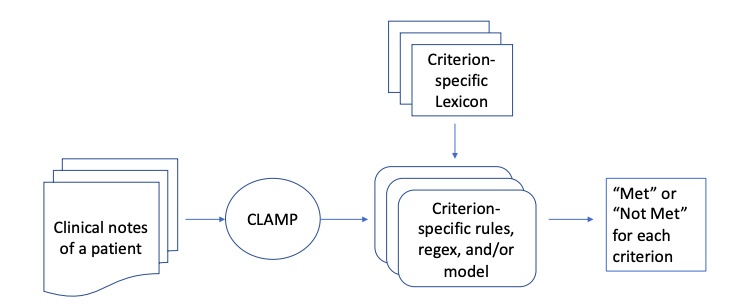

assisting health care,

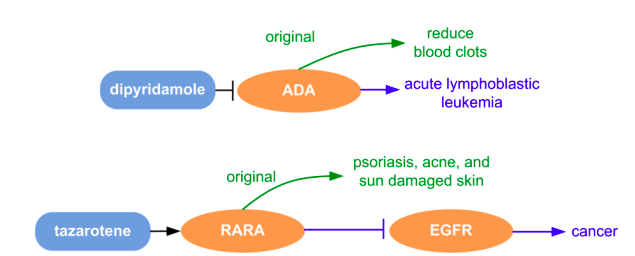

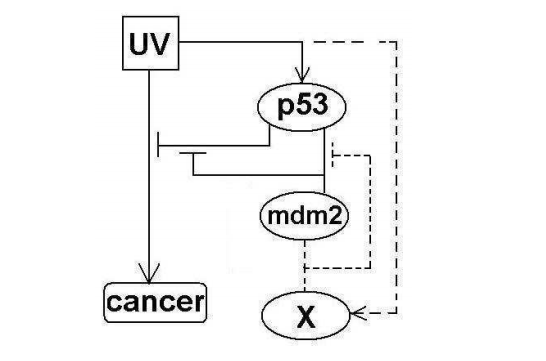

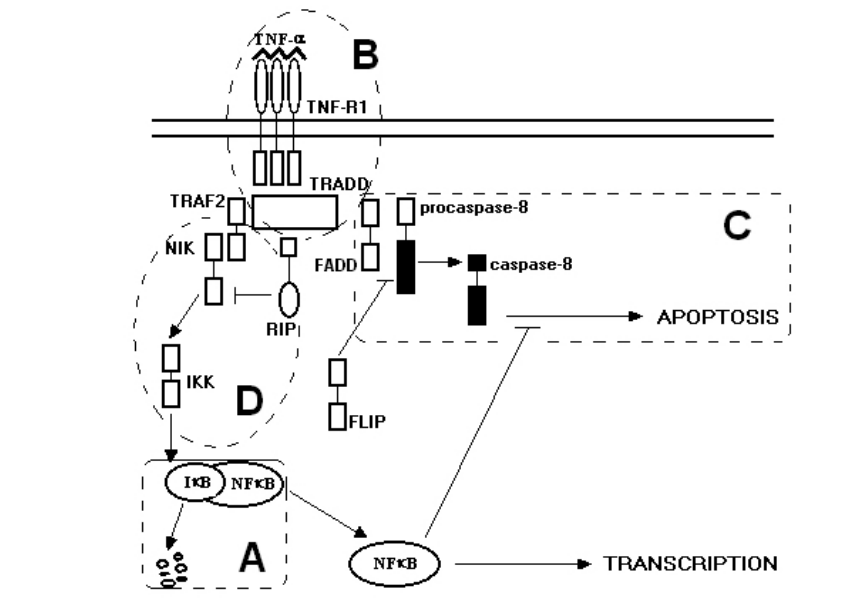

enabling scientific discovery through automated literature processing,

human-machine collaboration on difficult tasks such as software vulnerability detection, and

human-robot collaboration.

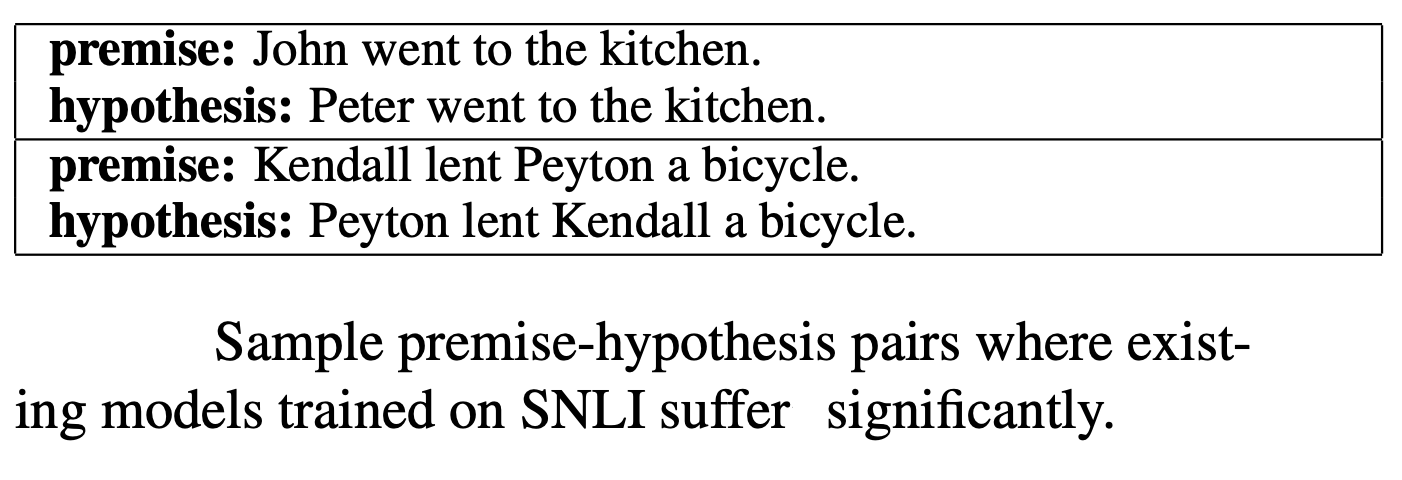

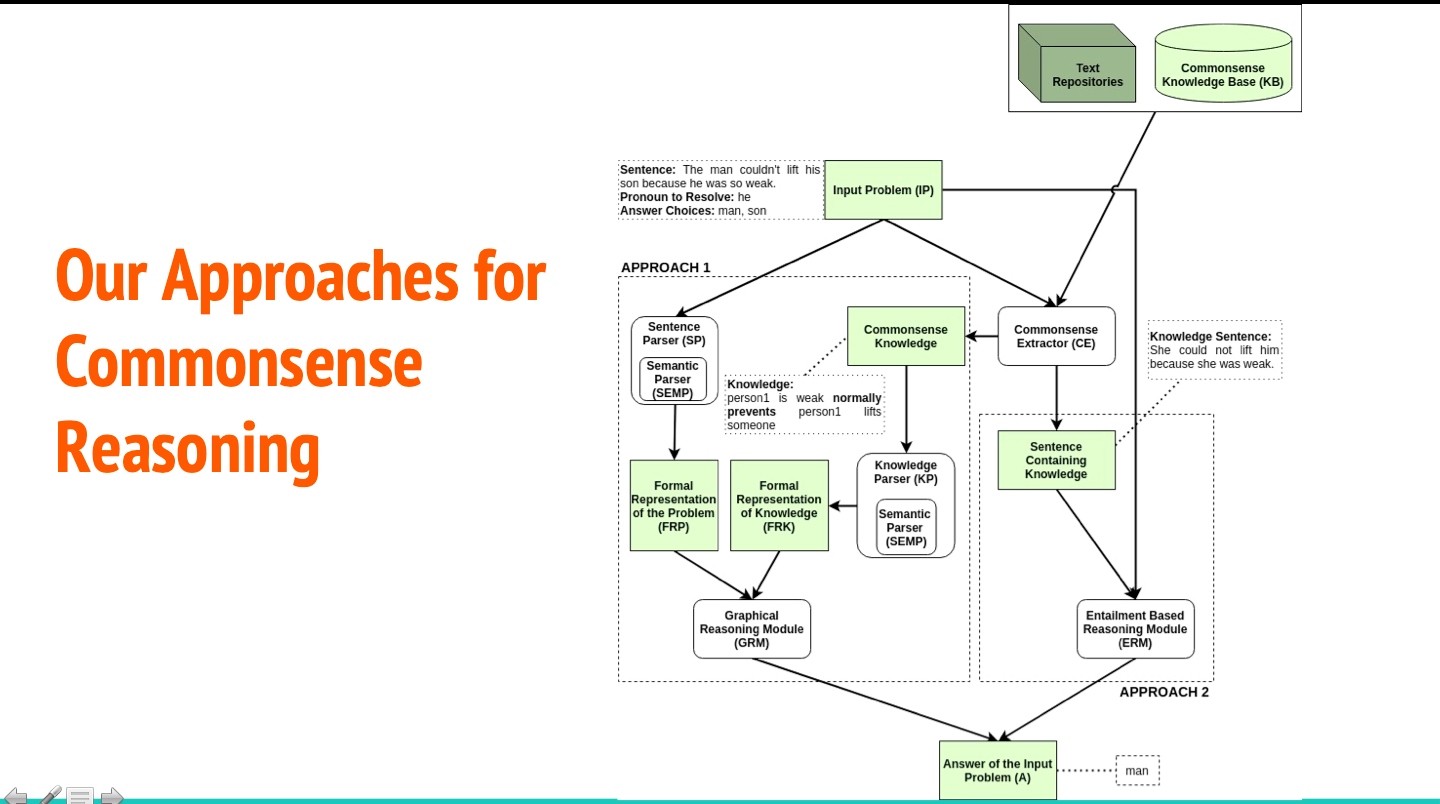

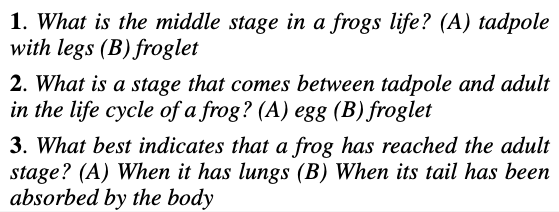

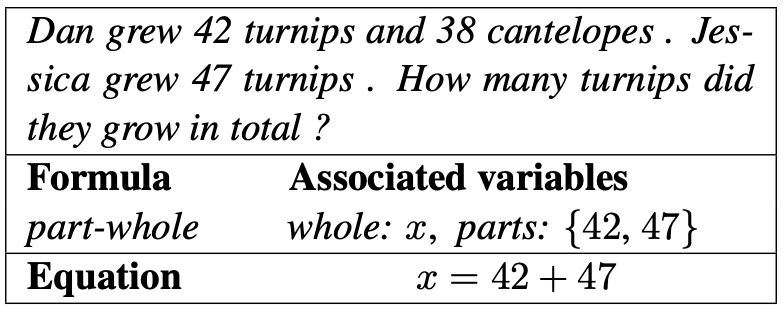

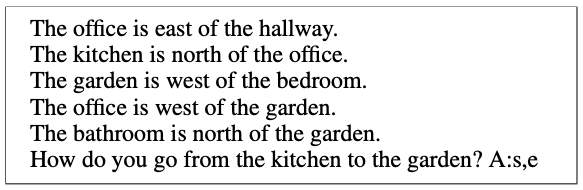

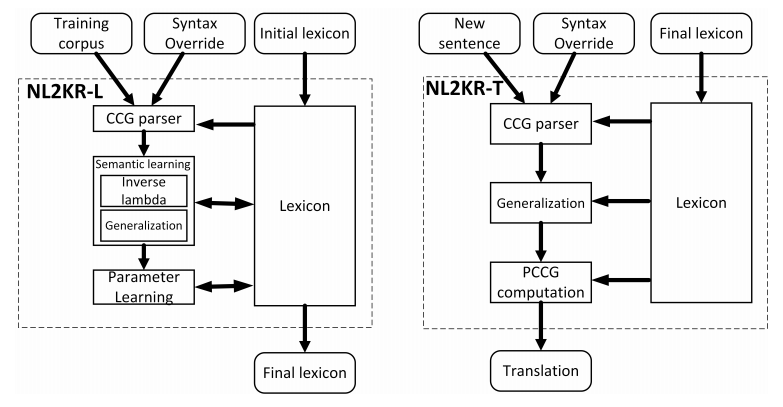

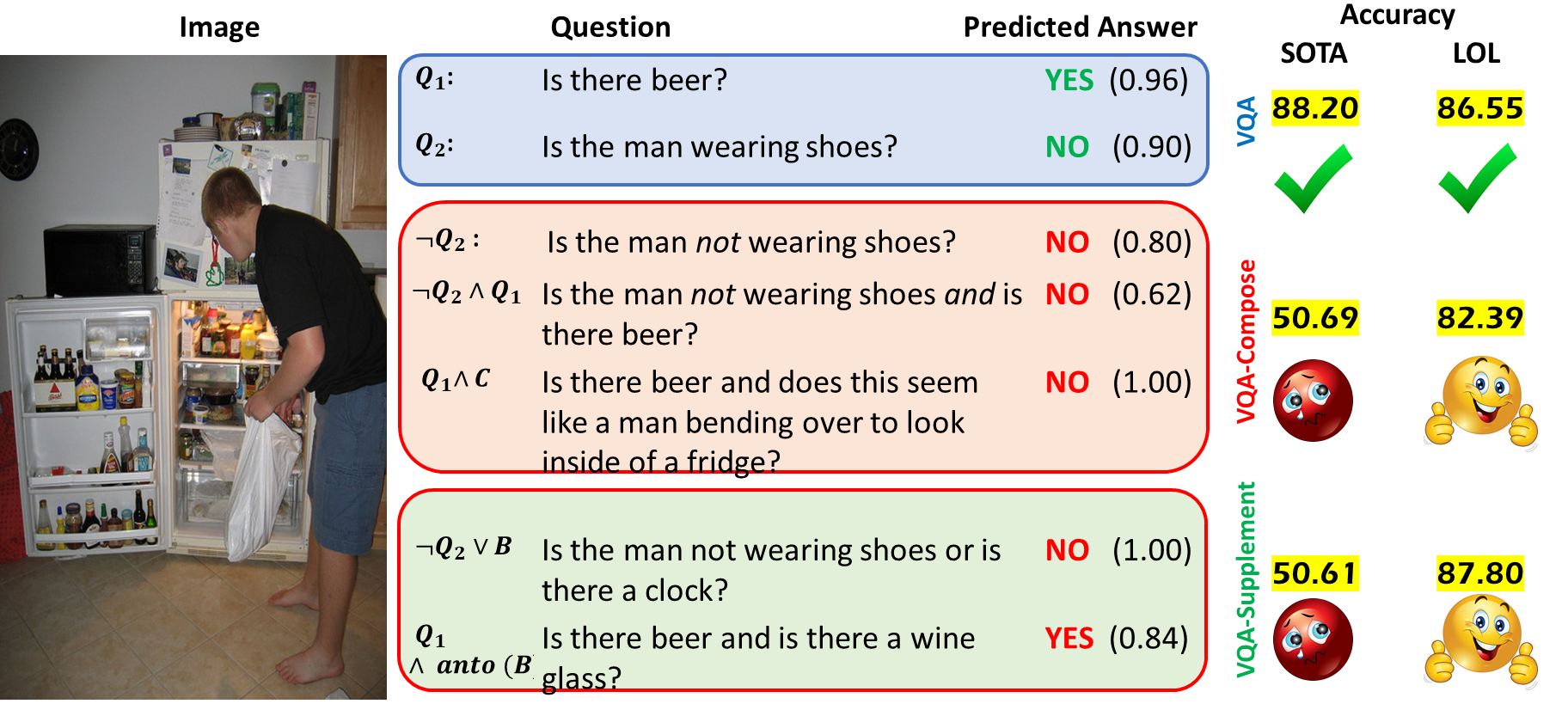

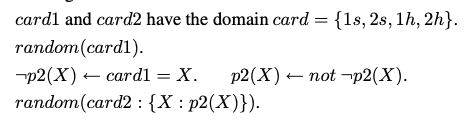

Our lab's focus and USP (unique selling proposition) is to augment machine learning (including deep learning approaches) with knowledge and reasoning for the above tasks, since in most cases accumulated task-relevant knowledge exists and commonsense reasoning is often important. In pursuing the use of knowledge and reasoning together with machine learning, we face several questions and challenges, such as:

how to incorporate knowledge and reasoning into machine learning methods;

how to acquire knowledge, especially commonsense knowledge;

how to identify key aspects of commonsense knowledge;

how to figure out what knowledge is missing;

how to obtain knowledge from text;

how to figure out appropriate knowledge representation formalisms to use;

how to determine the appropriate knowledge learning approach to use;

how to use question answering datasets to acquire knowledge;

how to use crowdsourcing for knowledge acquisition; and

how to do reasoning in the face of mistake-prone knowledge extraction methods and in the absence of a unified knowledge representation formalism.

Our research falls under the general area of AI but currently has a special focus on Cognition. Hence the name of our lab.

We work closely with several other labs in CIDSE at ASU. In particular, we have joint projects and/or joint publications with the Active Perception Group, Yochan Lab, Safecom lab, and Interactive Robotics Lab. Our external collaborators include the HRI Lab at Tufts, the KLAP lab at NMSU, and the Knowledge Based Systems Group at TU Wien.
