Research Narrative

Thus the research in KR&R was driven by the quest to find appropriate knowledge representation formalisms and reasoning mechanisms, while the research in AA&P focused on formalizing purposeful action (agent behavior) and various aspects of commonsense reasoning in which reasoning about actions plays a central role. Our 2003 book chronicles our work as well as the substantial progress made in these two foundational areas. Since then, while continuing to work in these two foundational areas, we have broadened our research to include Cognition and Human Centered AI.

In continuation of our work on KR&R, we developed formalisms that combine probability with logic and used them to formalize causal and counterfactual reasoning and non-naive conditioning. In continuation of our work on AA&P, we formulated the notion of maintainability and developed a "specification-to-code" methodology to create policies for maintainability goals and for goals in non-deterministic domains. We developed various goal specification languages and, more recently, a high-level language for multi-agent domains that allows agents to reason about other agents' knowledge or lack of it.

In recent years our focus has been on building integrated AI systems. To that end we have tried to bridge the gap between learning and reasoning and have developed systems that combine both. We developed an incremental and iterative method for learning rules.

Cognition:

A crucial aspect of building integrated AI systems is cognition, defined (by the Oxford Dictionary) as "the mental action or process of acquiring knowledge and understanding through thought, experience, and the senses." Our recent research has focused on cognition, especially with respect to understanding text and images. While purely neural approaches have recently been the go-to methodology for both, we have focused on combining neural (and other machine learning) methods with reasoning over knowledge, and we have used question answering challenges as benchmarks to evaluate our approaches. In particular, we have targeted hard challenges in which knowledge (including commonsense knowledge) plays an important role.

Human Centered & Use Inspired AI:

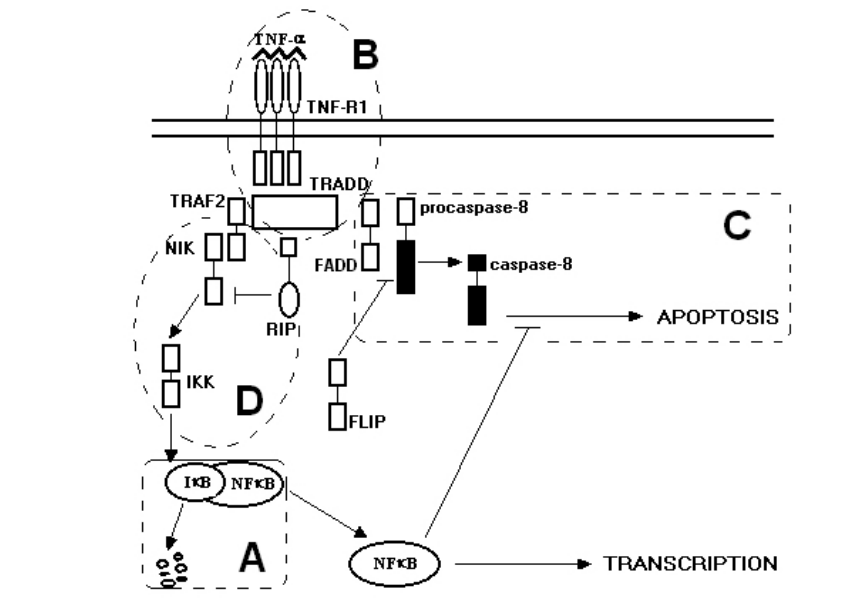

Robots and biological pathways were the initial domains that inspired us. Our robots in the AAAI 96 and 97 robot contests combined high-level and low-level reasoning. Since then our work has ranged from human-robot interaction, where humans communicate with robots using natural language, to our recent foray into imitation learning, where robots learn from demonstrations that include verbal commentary and instructions.

Biochemical pathways inside cells regulate not just the cells but the organism as a whole, and reasoning about them is needed to understand various phenomena, predict the impact of interventions (such as drugs), and design therapies. In addition, in the discovery phase, reasoning is needed to hypothesize missing knowledge. We developed new reasoning-about-actions methodologies for such domains, developed various methods for extracting information from text in this domain, and combined text extraction and reasoning with pharmacokinetic knowledge to predict drug-drug interactions and identify novel drug indications. Recently, in collaboration with researchers at Mayo, we have been working on understanding clinical notes and patient databases for various health care applications. This includes cohort selection using clinical notes, which involves identifying criteria and phenotypes such as drug abuse, alcohol abuse, decision-making ability, major diabetes-related complications, and elevated creatinine. Our other ongoing research in this area includes information extraction from clinical notes, automated document generation (in particular, survivor care plans), social media analysis, and evidence synthesis.

One of our most recent forays into use-inspired AI is vulnerability detection in program code using a man-machine collaboration approach.

Research Highlights

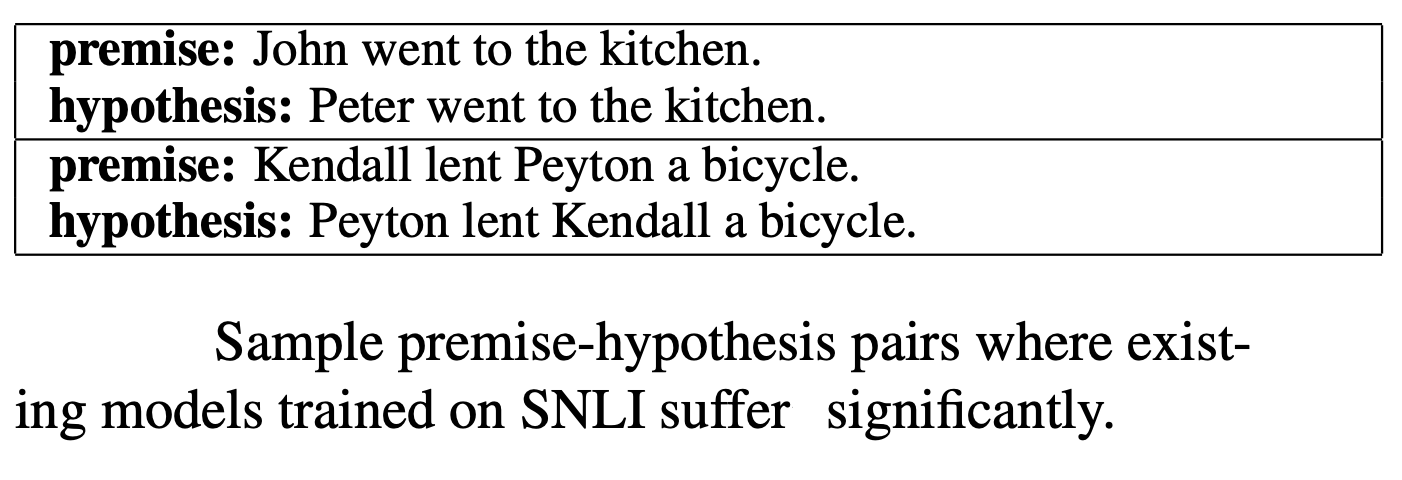

Improving Natural Language Inference

An enhanced data set that distinguishes different entities and different roles.

A new NLI model amenable to learning such distinctions.

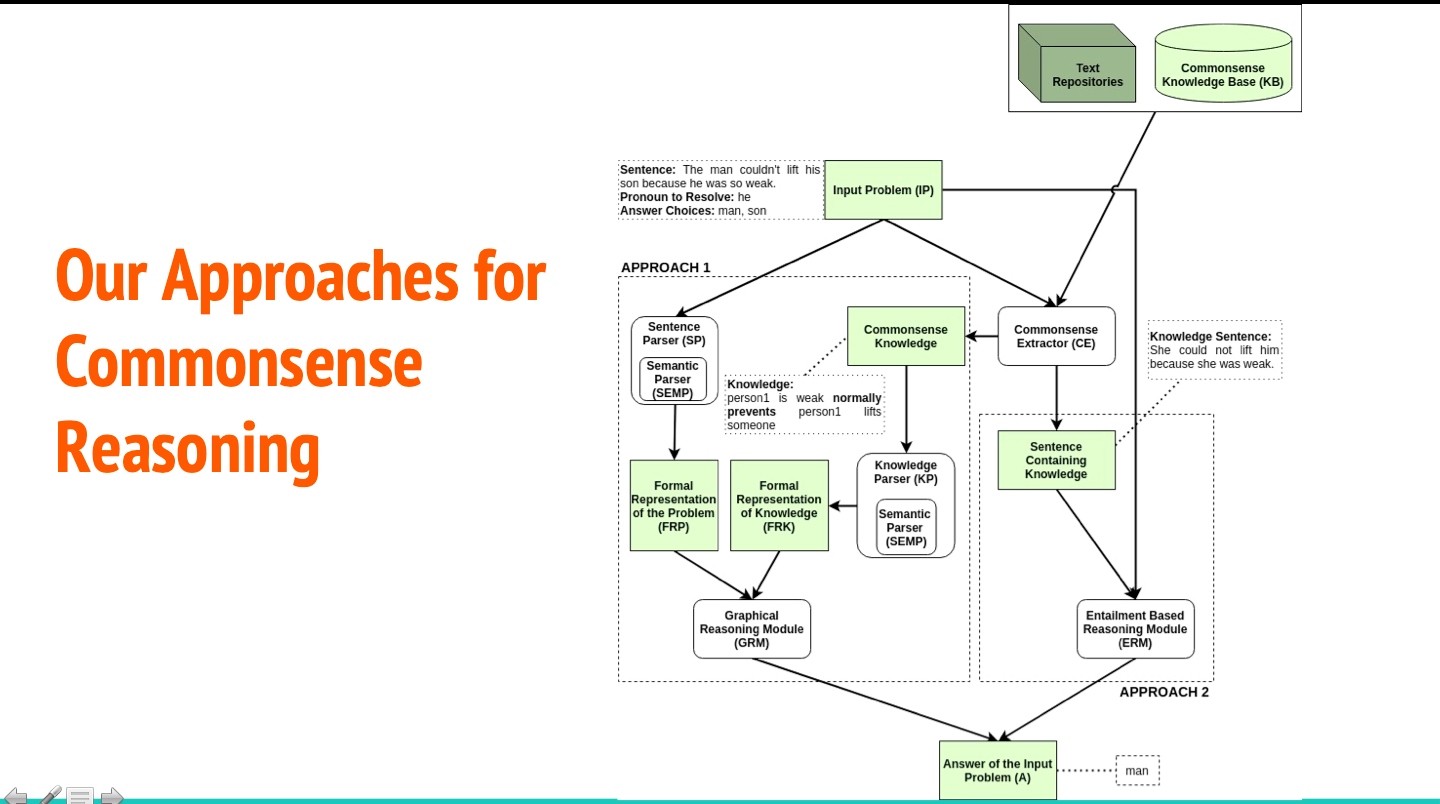

Knowledge Hunting & Neural Language Models for WSC

We combine Knowledge Hunting and Neural Language Models to solve the Winograd Schema Challenge (WSC).

Event-Sequences from Image Pairs

A data set of image pairs consisting of block towers.

A neural model that learns and reasons to generate event sequences from such image pairs.

Integrating Knowledge and Reasoning in Image Understanding

Various approaches to integrate knowledge and reasoning for image understanding.

Open Book QA

Our system for Open Book Question Answering finds relevant knowledge in the open book, analyzes it to figure out what knowledge may be missing, looks for the missing knowledge in a large knowledge repository (expressed in natural language), and then uses all of this knowledge to answer the question.

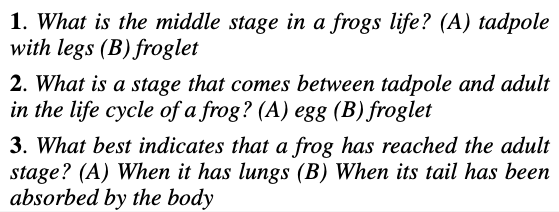

QA using ASP and NLI

Our system uses Answer Set Programming to formalize hard-to-learn notions often found in questions, such as "indicates", and uses NLI (natural language inference) to identify the correct answer in a multiple-choice question answering setting where questions must be answered with respect to knowledge expressed in textual form.

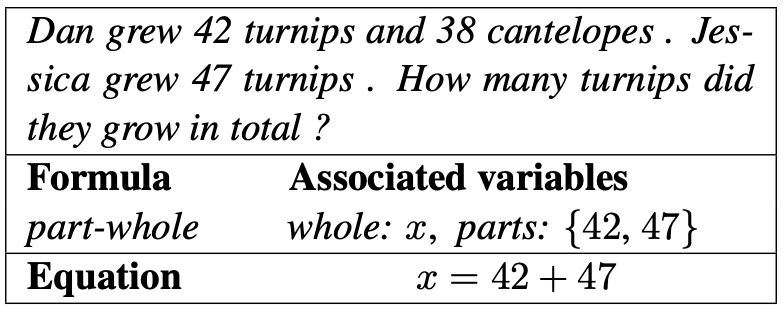

Solving simple word arithmetic problems

An approach that mimics how humans solve such problems: it finds the appropriate formula to use and maps the elements of the word problem to the elements of the formula, performing both steps jointly.

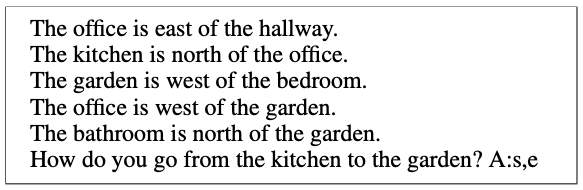

ASP-based ILP to solve bAbI

Addressing the Facebook bAbI question answering challenge by combining statistical methods with inductive rule learning and reasoning.

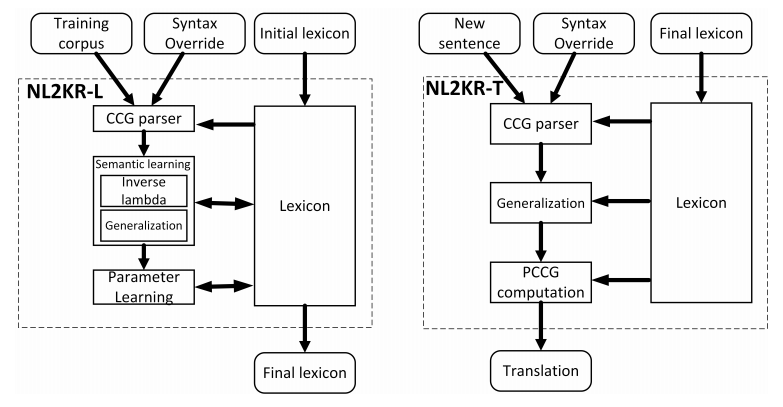

A Platform to build NL to KR translation systems

Our NL2KR (Natural Language to Knowledge Representation) platform learns from a training set of English sentences, their translations in a desired formal language, and the lambda expressions of a small set of words. It creates a translation system that can translate English text into the specified formal language.

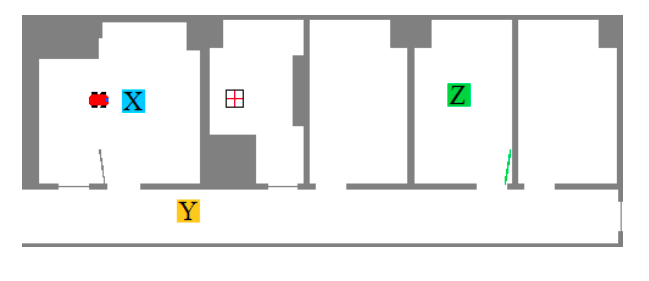

Spatial Knowledge Distillation to aid Visual Reasoning

A framework that combines recent advances in knowledge distillation (teacher-student framework), relational reasoning, and probabilistic logical languages to incorporate spatial knowledge into existing neural networks for the task of fact-based Visual Question Answering.

Reasoning using Neural architectures for VQA

We use an explicit reasoning layer on top of a set of penultimate neural-network-based systems for VQA (visual question answering). The reasoning layer adopts a Probabilistic Soft Logic (PSL) based engine to reason over a basket of inputs: visual relations, the semantic parse of the question, and background ontological knowledge from word2vec and ConceptNet.

Image Puzzle Solving using PSL

We compile a dataset of over 3,000 image riddles, where each riddle consists of 4 images and a ground-truth answer. We develop a probabilistic-reasoning-based approach that combines visual detection (including object and activity recognition) with probabilistic commonsense knowledge to answer these riddles with reasonable accuracy.

Image understanding using Scene Description Graph

Visual recognition techniques and commonsense reasoning are used to create Scene Description Graphs (SDGs) of images. An SDG is a directed labeled graph representing objects, actions, and regions, as well as their attributes, along with inferred concepts and semantic, ontological, and spatial relations. The utility of SDGs is demonstrated on image captioning, image retrieval, and examples in visual question answering.
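To make the data structure concrete, here is a minimal Python sketch of a directed labeled graph in the spirit of an SDG. The class name, node kinds, and edge labels (`agent`, `recipient`, `location`, `is_a`) are hypothetical illustrations, and the graph is built by hand rather than by the visual recognition and reasoning pipeline described above.

```python
from collections import defaultdict


class SceneDescriptionGraph:
    """Toy directed labeled graph in the spirit of an SDG.

    Every node has a kind (e.g. "object", "action", "region", "concept");
    every edge is (source, label, target). Kinds and labels are illustrative.
    """

    def __init__(self):
        self.kind = {}                   # node name -> node kind
        self.out = defaultdict(list)     # node name -> [(label, target), ...]

    def add_node(self, name, kind):
        self.kind[name] = kind

    def add_edge(self, source, label, target):
        # Refuse edges between unknown nodes so the graph stays consistent.
        for node in (source, target):
            if node not in self.kind:
                raise KeyError(f"unknown node: {node}")
        self.out[source].append((label, target))

    def targets(self, source, label):
        """All nodes reachable from `source` by one edge with this label."""
        return [t for lab, t in self.out[source] if lab == label]


# A hand-built graph for a hypothetical image of a man riding a horse on a beach.
sdg = SceneDescriptionGraph()
for name, kind in [("man", "object"), ("horse", "object"),
                   ("ride", "action"), ("beach", "region"),
                   ("person", "concept")]:
    sdg.add_node(name, kind)
sdg.add_edge("ride", "agent", "man")
sdg.add_edge("ride", "recipient", "horse")
sdg.add_edge("ride", "location", "beach")
sdg.add_edge("man", "is_a", "person")   # an inferred ontological relation

# "Who is riding?" becomes a lookup along the "agent" edge.
print(sdg.targets("ride", "agent"))      # ['man']
```

Keeping each node's kind alongside the labeled edges lets a simple traversal answer questions or assemble caption fragments; a real SDG would also carry attributes and confidence information.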

DeepIU: an Architecture for image understanding

We develop the DeepIU cognitive architecture for understanding images, through which a system can recognize the content and the underlying concepts of an image, and reason about and answer questions concerning both, using a visual module, a reasoning module, and a commonsense knowledge base. In this architecture, visual data is combined with background knowledge and iterates through the visual and reasoning modules to answer questions about an image or to generate a textual description of it.

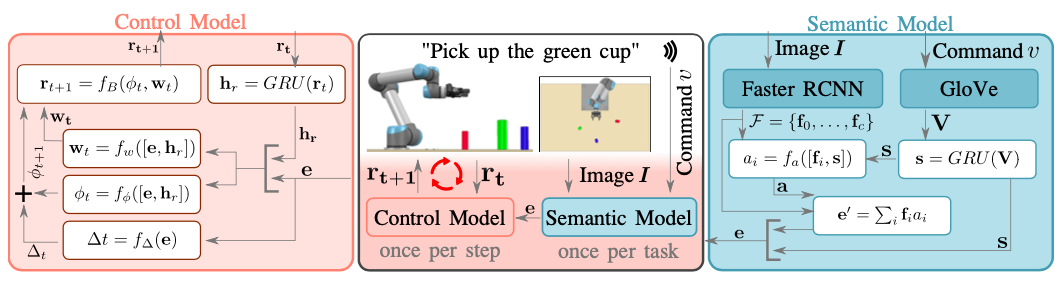

Imitation Learning: Combining Language, Vision and Demonstration

A neural method for imitation learning of robot policies that combines language, vision and demonstration.

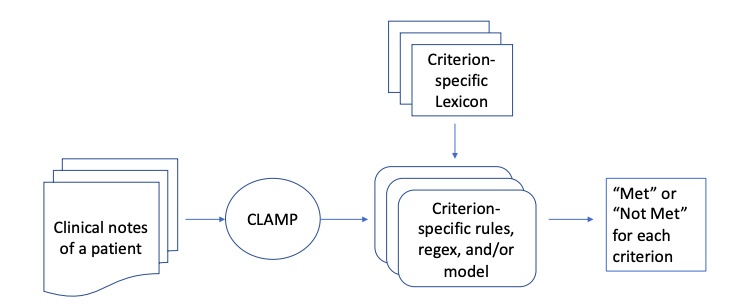

Cohort Selection from Clinical Notes

Automated development and use of special-purpose lexicons for cohort selection from clinical notes.

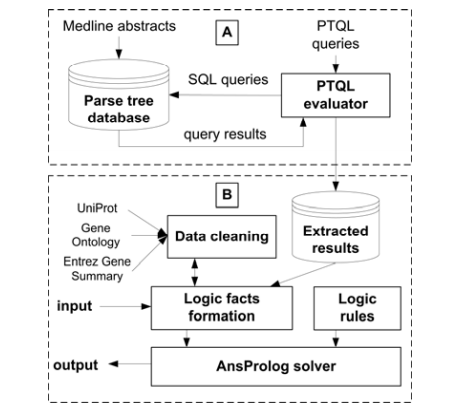

Discovering drug-drug interactions

Discovering drug-drug interactions by first using text mining to extract elements of the pharmacokinetic pathways of drugs, and then using reasoning to identify possible interference between the pharmacokinetic pathways of two drugs.

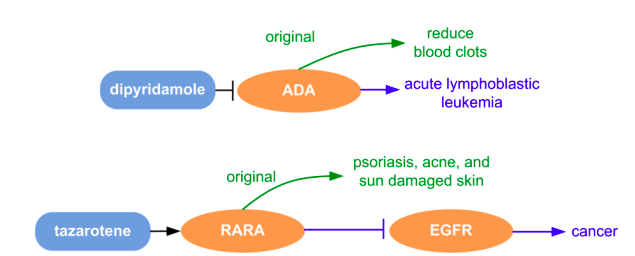

Identifying novel drug indications

Identifying novel drug indications by first extracting from text the molecular effects caused by drug-target interactions and their links to various diseases, and then reasoning with them.

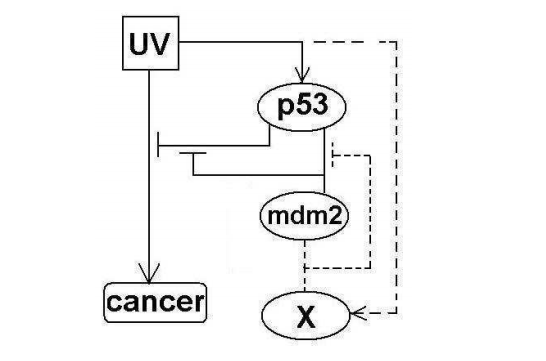

Hypothesis Formation in Biochemical Networks

Reasoning with existing knowledge about a biochemical network and with observations to hypothesize possible missing interactions in the network. The approach is validated using the p53 network.

Representing and reasoning about cell signaling networks

A knowledge based approach for representing and reasoning about cell signaling networks.

Reasoning types include predicting the effects of interventions, explaining observations, and designing and verifying therapies (intervention sequences) with respect to a desired behavior.

High Level Language for Human-Robot Interaction

A high-level language for human-robot interaction, developed using ideas from action execution languages and grounded with respect to simulated human-robot interaction transcripts.

Book: Knowledge Representation, Reasoning and Declarative Problem Solving

A book about various building-block results on one of the most developed KR languages, Answer Set Programming, and its use in various reasoning tasks.

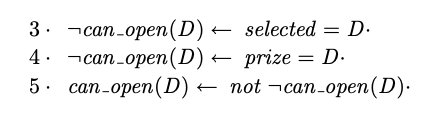

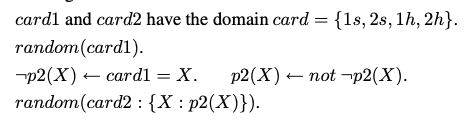

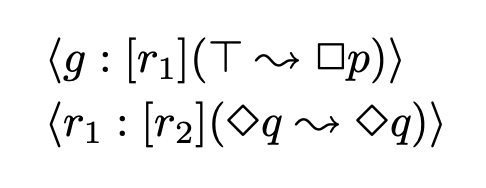

Probabilistic Reasoning with Answer Sets

Foundations of a language that combines probabilistic reasoning with answer set programming.

Using P-log for Causal and Counterfactual Reasoning and Non-Naive Conditioning

Using the probabilistic logic programming language P-log to formalize causal and counterfactual reasoning and non-naive conditioning.

Combining Multiple Knowledge Bases

Formulation of the notion of combining multiple knowledge bases in the presence of constraints that the combined knowledge base must adhere to. Knowledge bases that are logic programs, first-order theories, and default theories are considered in three separate papers.

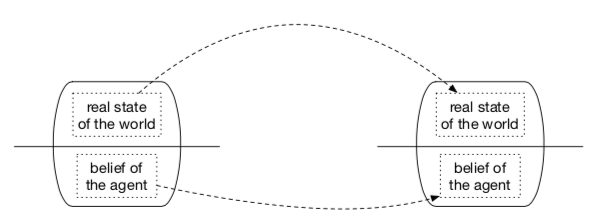

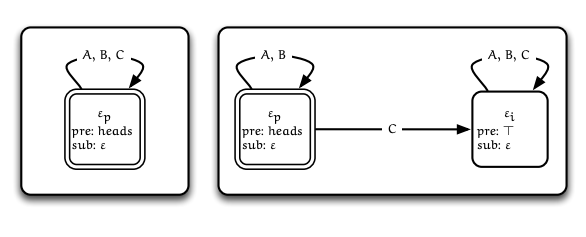

Formalizing sensing actions

Sensing actions, in the purest sense, are actions that do not change the physical world but change the agent's knowledge about the world. We define the notion of a knowledge-state and formalize the transition between knowledge states due to a sensing action.
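A minimal Python sketch of this idea, assuming the common possible-worlds reading in which a knowledge state is the set of complete states the agent considers possible. The encoding, fluent names, and function names are hypothetical, and the sketch is a simplification rather than the formalization in the paper.

```python
from itertools import product

# A state is the frozenset of fluents that are true in it (toy encoding).
FLUENTS = ("door_open", "light_on")


def all_states(fluents):
    """Enumerate every complete state over the given fluents."""
    return {
        frozenset(f for f, v in zip(fluents, values) if v)
        for values in product([False, True], repeat=len(fluents))
    }


def sense(knowledge_state, fluent):
    """Apply a sensing action for `fluent`.

    The physical world is unchanged; the knowledge state (the set of states
    the agent considers possible) splits into the states where the fluent
    is true and those where it is false. Which branch the agent ends up in
    depends on the value actually observed at execution time.
    """
    true_branch = {s for s in knowledge_state if fluent in s}
    false_branch = knowledge_state - true_branch
    return {True: true_branch, False: false_branch}


def knows_whether(knowledge_state, fluent):
    """The agent knows whether `fluent` holds if all its possible states agree."""
    return len({fluent in s for s in knowledge_state}) <= 1


k0 = all_states(FLUENTS)            # initially nothing is known: 4 states
branches = sense(k0, "door_open")
k1 = branches[True]                 # suppose the sensor reports the door open
print(len(k0), len(k1))             # 4 2
print(knows_whether(k1, "door_open"), knows_whether(k1, "light_on"))  # True False
```

Because sensing only filters the set of possible states, the agent learns the value of `door_open` while remaining ignorant of `light_on`, which is exactly the "changes knowledge, not the world" distinction drawn above.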

Maintenance goals of agents

Formulating the notion of maintenance goals of agents in a dynamic environment, and using a logic programming specification of policies that satisfy maintenance goals to automatically construct the desired policies.

Planning in Non-deterministic Domains

SAT and logic programming specifications of the properties of weak, strong, and strong cyclic plans in non-deterministic domains lead to the automatic construction of polynomial-time algorithms for such plans.
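For readers unfamiliar with the plan types, the following Python sketch computes weak and strong plans with the standard backward fixpoint used by model-checking-based planners. This is a swapped-in, simpler technique, not the SAT or logic programming encoding described above; the domain, state names, and function name are hypothetical, and strong cyclic plans are omitted.

```python
def backward_plan(trans, goal, strong):
    """Grow a policy backwards from the goal states.

    trans maps (state, action) to the set of possible successor states.
    A state is added once some action from it leads into the solved set:
      weak:   at least one possible successor is already solved;
      strong: every possible successor is already solved.
    States are only added after their successors, so a strong policy is
    acyclic and reaches the goal on every execution.
    """
    policy = {}
    solved = set(goal)
    changed = True
    while changed:
        changed = False
        for (state, action), succs in trans.items():
            if state in solved or not succs:
                continue
            ok = succs <= solved if strong else bool(succs & solved)
            if ok:
                policy[state] = action
                solved.add(state)
                changed = True
    return policy, solved


# Hypothetical toy domain: from s0 a risky "shortcut" may reach the goal g
# or get stuck in dead_end; the "long" route is slower but always works.
trans = {
    ("s0", "shortcut"): {"g", "dead_end"},
    ("s0", "long"): {"s1"},
    ("s1", "walk"): {"g"},
}
weak_policy, _ = backward_plan(trans, {"g"}, strong=False)
strong_policy, strong_solved = backward_plan(trans, {"g"}, strong=True)
print(weak_policy)    # {'s0': 'shortcut', 's1': 'walk'}
print(strong_policy)  # {'s1': 'walk', 's0': 'long'}
```

The weak plan accepts the shortcut because it can succeed; the strong plan rejects it because one outcome never reaches the goal. Each loop pass is polynomial in the size of the transition table, and at most one pass per state adds something.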

Elaboration Tolerant Revision of Goals

Development of non-monotonic temporal logics that facilitate elaboration tolerant revision of goals.

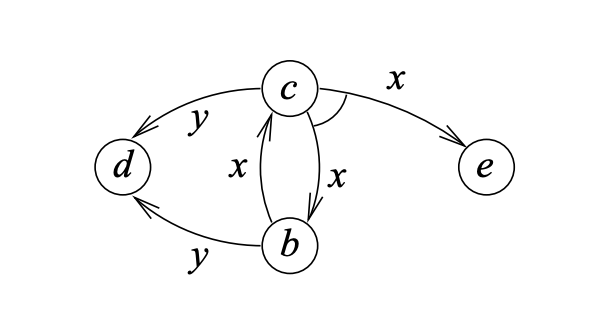

Modeling multi-agent scenarios involving agents' knowledge about others' knowledge

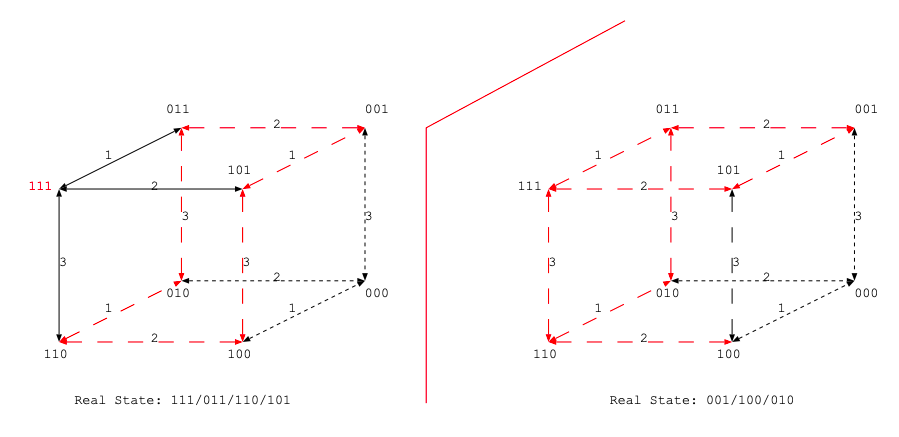

Using answer set programming to model multi-agent scenarios involving agents' knowledge about others' knowledge. We implement a new kind of action, which we call “ask-and-truthfully-answer,” and show how this action brings a new dimension to the muddy children problem.

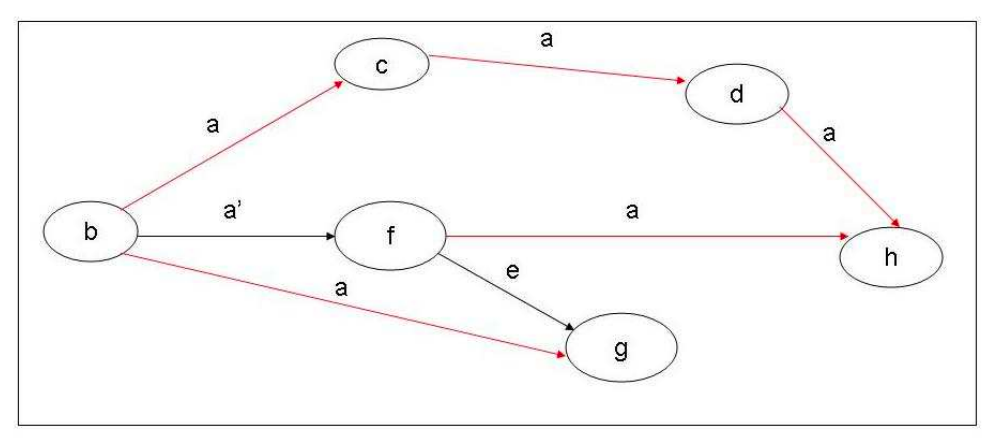

Multi-Agent Action Modeling using Perspective Fluents

In a multi-agent setting, the effect of an action on agents' knowledge depends on the agents' perspective: whether they are aware of the action, and whether they are close enough to observe its impact. We introduce the notion of perspective fluents and use them to formalize (the effects of) multi-agent actions.

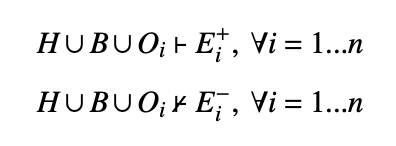

Incremental and Iterative Learning of Answer Set Programs

Enhancing traditional inductive logic programming to allow a large number of (positive and negative) examples, each with its own background knowledge component, and developing an incremental and iterative learning algorithm and system to learn answer set programs from such mutually distinct examples.